January quick-takes

Recap of my short posts on LinkedIn and elsewhere in January

Truly Open Source AI: Olmo 3 and Nemotron 3 Nano

The past few weeks have been good for open source AI, with the recent releases of Olmo 3 (by Ai2) and Nemotron 3 Nano (by NVIDIA).

Both are truly open source: not only the weights (needed to “run” the model, akin to compiled code), model reports, and the source code, but also the training data and code required to re-train them from scratch, under a permissive license.

Olmo 3 comes in 7B and 32B sizes and several variants: Base, Instruct (focus on quick responses, multi-turn chat, instruction following, tool use), Think (long reasoning chains of thought), and RL Zero (reinforcement learning directly on top of the base model).

Nemotron 3 Nano is a Mixture-of-Experts (MoE) model with 3.2B active and 32B total parameters (30B-A3B).

Both perform better than Qwen3 30B-A3B and GPT-OSS 20B (which are not fully open – only the weights are available).

What does this mean for you?

If you're an LLM researcher, this is super-useful information, data, and insight – and you probably already know everything about these releases!

If you're a FOSS enthusiast, rejoice at truly open models becoming viable for everyday use (even if they fall short of the big guns).

If you use local AI, you probably don't care about all the training details, but you do get more options for running on premises or on-device, which is always good.

If you're using AI products or integrating with LLMs over an API, you probably don't care much, but I hope the post was interesting.

If you're none of the above – I mean I appreciate it and am thankful, but why are you reading this?! Do let me know in the comments why, I'm curious :)

(As a software developer, I couldn't write the above English-language SWITCH statement without a default/else catchall block... devs will understand).

AI Model Releases: Gemini 3 Flash, GPT Image 1.5, SAM Audio, and SHARP

A bunch of interesting AI model releases this week! If you've got the need for (AI) speed, want to create images, do 2D->3D or analyze audio, here's new stuff to play with:

Google released Gemini 3 Flash, a speedier version of its (also recently released) Gemini 3 Pro. By all accounts, it's quite good while being noticeably faster and 4x cheaper.

OpenAI released GPT Image 1.5. While not as powerful as Gemini 3 Pro Image (a.k.a. Nano Banana Pro), it's a significant improvement over the previous GPT Image version. My personal favorite is the “graphite pencil sketch” preset. It's already integrated in ChatGPT.

Meta released SAM Audio, an audio-capable variant of their Segment Anything Model that can now also pinpoint and isolate audio samples. I was impressed by the ability to click on an object in the video to isolate its sound (integration of video and audio segmentation).

Apple released a fast on-device vision model, SHARP, that can take a single image and produce a (faux) 3D view suitable for e.g. 3D vision goggles in real time. While it doesn't do a 3D scene reconstruction (like MASt3R & friends), it's probably useful where you want a (subjective) 2D-to-3D effect, fast, on-device.

OpenAI Launches ChatGPT App Store with MCP-Powered Plugins

OpenAI has opened its ChatGPT app store for outside submissions. ChatGPT apps are plugins (widgets) that users can tag and use directly from the conversation without leaving ChatGPT.

Apps are MCP-powered and represent a second attempt at the “Custom GPTs” idea from a few years ago, which never became very popular. Beyond the usual MCP goodies, apps can insert (simple) UI (widgets) right into the chat.

To use an app within ChatGPT, you need to “connect” it using the standard OAuth authorization flow, after which you can @-tag it in the chat.

For developers, OpenAI has published some guidelines on building good experiences.

It'll be interesting to see if this grows into a full app store in the future. Maybe with payments? Monetization is one of the unsolved problems for ChatGPT. Charging a fee or % is an obvious move, though it isn't obvious if that would really work and move the needle.

So far, the new ChatGPT Apps feature looks like a promising start.

Andrej Karpathy's 2025 LLM Year in Review

Andrej Karpathy posted a 2025 LLM year in review.

Andrej listed a few notable and mildly surprising paradigm changes, in his (very well informed!) opinion:

- Reinforcement Learning from Verifiable Rewards (RLVR) – the next scaling frontier

- Jagged Intelligence – we're not reimplementing human/animal intelligence, so the strengths and weaknesses don't match – it's just different

- New layer of LLM apps – “LLM wrappers” like Cursor show there's a lot of value in properly orchestrating and integrating the LLMs for a specific vertical

- AI that lives on your computer – Claude Code as the first convincing demonstration of what a real AI agent looks like

- Vibe Coding – will terraform software and alter job descriptions

- LLM GUI – chat interface is the worst computer interface, but we're still learning how to do better

Andrej's articles (& videos) are always worth a read (& watch) and this one is no exception. If you haven't been following the AI hype closely (tell me how you managed that feat!), this is a great no-nonsense overview.

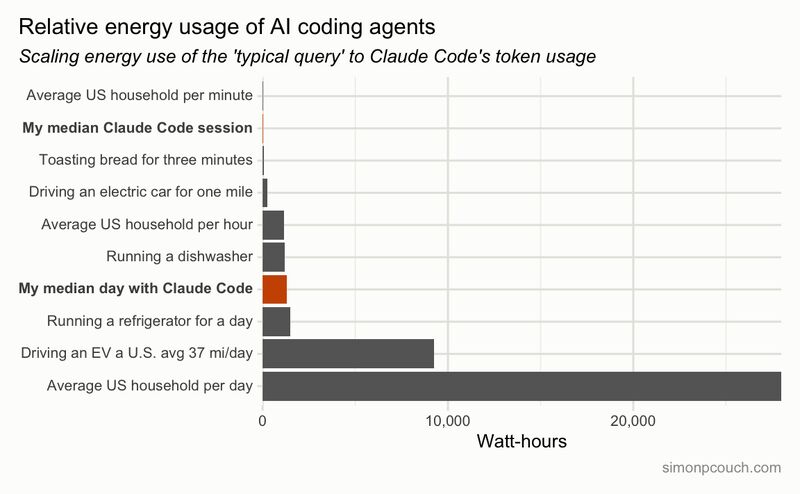

How Much Energy Does AI-Assisted Coding Consume?

How much energy does a typical AI coding session consume?

A typical AI query is estimated to consume ~0.3 Wh, but agentic coding is far from a typical AI chatbot query, spending 10x-100x more tokens (and energy).

In a great post diving into the actual costs, the author analyzes his typical coding session and typical day (a full day of coding, several agents in parallel) to arrive at 1.3 kWh per day of work.

I ran the calculation with my own numbers and came up with ~18 kWh per month, but that's not full-time use – a few hours per day, and not every workday.

The cost is not insignificant, but I'd say it's comparable to using other useful technology such as a dishwasher or fridge. In my case at least, the cost (in dollar and kWh terms) is worth it.
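To put the figures above side by side, here's a back-of-the-envelope sketch in Python. It only uses the numbers quoted in this post (~0.3 Wh per typical query, 1.3 kWh per full coding day, ~18 kWh for my month). The constant and function names are mine, and the results are rough estimates, not measurements.

```python
# Figures quoted in the post; all of them are estimates.
TYPICAL_QUERY_WH = 0.3   # ~0.3 Wh per typical chatbot query
FULL_DAY_KWH = 1.3       # full day of agentic coding, several agents in parallel
MY_MONTH_KWH = 18.0      # my own part-time usage per month


def query_equivalents(kwh: float, query_wh: float = TYPICAL_QUERY_WH) -> float:
    """Number of typical chatbot queries that would use the same energy."""
    return kwh * 1000.0 / query_wh


def full_day_equivalents(kwh: float, day_kwh: float = FULL_DAY_KWH) -> float:
    """Number of full agentic-coding days that would use the same energy."""
    return kwh / day_kwh


if __name__ == "__main__":
    print(f"One full coding day ~ {query_equivalents(FULL_DAY_KWH):,.0f} typical queries")
    print(f"My month ~ {full_day_equivalents(MY_MONTH_KWH):.1f} full coding days")
```

By this arithmetic, one full day of agentic coding is on the order of a few thousand typical chatbot queries, and my ~18 kWh month works out to roughly two weeks' worth of full coding days.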

Google's Search Monopoly and the Web Crawling Problem

A perspective from Kagi (an alternative, paid, ad-free search engine) on how Google keeps its search monopoly, why it's bad, and what to do about it.

A few days ago I wrote an article on a similar topic – how AI scraping is a problem because of the lack of a unified, common public corpus – and shared some ideas on how to solve it.

In both cases, the technical challenges, while formidable, are solvable. The blocker is the legal framework and practice. In a nutshell, Google used a ladder, then kicked it down, and nobody can follow.